A High Availability Solution for Seamless Application Failover

In high-availability systems, data consistency during failovers or switchovers is essential to maintain application continuity. The OpenClovis Checkpointing Service (CPS) serves as a critical infrastructure component, allowing applications to synchronize runtime data, thereby ensuring seamless transitions in the event of a failover or switchover.

Key Features of CPS

The CPS enables applications to store and retrieve their internal states in real time, at regular intervals, or during a failover/switchover. Here are some of its main features:

- State Preservation: Applications can regularly save state information to quickly recover after an unexpected failure.

- Incremental Checkpoints: CPS supports incremental checkpointing. Applications save their data in increments, writing only what has changed instead of the full state each time, which reduces overhead and improves protection against failures.

- Non-Transparent Mode: Applications explicitly create, write, and read checkpoints; CPS does not capture state on their behalf. Developers therefore decide when and how data is saved and retrieved.
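The incremental, application-driven style of checkpointing described above can be sketched as follows. This is a minimal Python model under stated assumptions, not the OpenClovis API (which is a C library): the class `IncrementalCheckpointer` and its methods are hypothetical, and a plain dictionary stands in for the checkpoint and its sections.

```python
class IncrementalCheckpointer:
    """Hypothetical helper that writes only the sections changed since the last checkpoint."""

    def __init__(self):
        self.store = {}       # stands in for the CPS checkpoint: section id -> bytes
        self._live = {}       # the application's current in-memory state
        self._dirty = set()   # section ids modified since the last checkpoint

    def update(self, section_id, data):
        # Change local state and remember that this section must be saved.
        self._live[section_id] = bytes(data)
        self._dirty.add(section_id)

    def checkpoint(self):
        # Non-transparent mode: the application decides when to call this.
        # Only dirty sections are copied, so unchanged state costs nothing to save.
        written = sorted(self._dirty)
        for section_id in written:
            self.store[section_id] = self._live[section_id]
        self._dirty.clear()
        return written
```

After updating only a `sessions` section, a call to `checkpoint()` rewrites that one section and leaves every other section untouched.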

CPS manages each checkpoint with a retention duration: a checkpoint that has not been opened by any process for its retention duration is deleted automatically, so unused checkpoints do not accumulate. If a process terminates unexpectedly, CPS closes all checkpoints that the process had open.
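A small model helps make the retention rule concrete. The sketch below assumes SAF-style semantics, where the retention clock runs only while no process has the checkpoint open; `RetentionRegistry`, its methods, and the use of plain numbers for time are hypothetical simplifications, not CPS internals.

```python
class RetentionRegistry:
    """Hypothetical model of checkpoint retention; time is a plain number of seconds."""

    def __init__(self):
        # name -> {"retention": seconds, "openers": set of pids, "idle_since": time or None}
        self.checkpoints = {}

    def create(self, name, retention, now):
        # Simplification: a checkpoint nobody has opened yet starts its retention clock at once.
        self.checkpoints[name] = {"retention": retention, "openers": set(), "idle_since": now}

    def open(self, name, pid):
        entry = self.checkpoints[name]
        entry["openers"].add(pid)
        entry["idle_since"] = None   # an open checkpoint is never expired

    def close(self, name, pid, now):
        entry = self.checkpoints[name]
        entry["openers"].discard(pid)
        if not entry["openers"]:
            entry["idle_since"] = now   # the clock starts when the last opener closes

    def process_terminated(self, pid, now):
        # Close every checkpoint the terminated process still had open.
        for name, entry in self.checkpoints.items():
            if pid in entry["openers"]:
                self.close(name, pid, now)

    def expire(self, now):
        # Delete checkpoints left unopened for at least their retention duration.
        expired = [name for name, entry in self.checkpoints.items()
                   if entry["idle_since"] is not None
                   and now - entry["idle_since"] >= entry["retention"]]
        for name in expired:
            del self.checkpoints[name]
        return expired
```

Because the crash handler goes through the same `close()` path, a checkpoint held by a process that dies is treated exactly like one that was closed normally, and it expires once its retention duration elapses.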

Core Components of CPS

The CPS is built around several core entities:

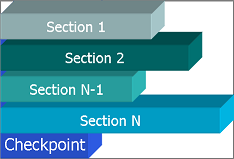

- Checkpoints: Cluster-wide elements with unique names, representing saved states of processes.

- Sections: Each checkpoint can contain several sections, each holding portions of the checkpoint data.

- Checkpoint Replicas: CPS stores checkpoint data replicas in RAM-based file systems (RAMFS) across different nodes for better performance. Replicas ensure data redundancy and availability, allowing CPS to recover data from a replica in case of a failure.

- Data Access Mechanism: Processes can access and manipulate checkpoint data through handles provided by CPS, allowing simultaneous access to different sections within a checkpoint.
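The relationship between checkpoints, sections, and handles can be sketched as a tiny in-memory service. This is an illustrative Python model only; `CheckpointService`, `open`, `write_section`, `read_section`, and `close` are hypothetical names, and replicas are left out to keep the focus on handle-based access.

```python
import itertools


class CheckpointService:
    """Hypothetical in-memory model: named checkpoints, sections, and opaque handles."""

    def __init__(self):
        self._checkpoints = {}                  # checkpoint name -> {section id: bytes}
        self._handles = {}                      # handle -> checkpoint name
        self._next_handle = itertools.count(1)  # source of unique handle values

    def open(self, name, create=False):
        if name not in self._checkpoints:
            if not create:
                raise KeyError(f"no such checkpoint: {name}")
            self._checkpoints[name] = {}        # names are unique, so one entry per name
        handle = next(self._next_handle)
        self._handles[handle] = name
        return handle

    def write_section(self, handle, section_id, data):
        self._checkpoints[self._handles[handle]][section_id] = bytes(data)

    def read_section(self, handle, section_id):
        return self._checkpoints[self._handles[handle]][section_id]

    def close(self, handle):
        del self._handles[handle]               # the checkpoint itself outlives the handle
```

Two handles opened on the same checkpoint can work on different sections independently, and closing one handle does not affect data reached through the other.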

CPS Architecture and Database Structure

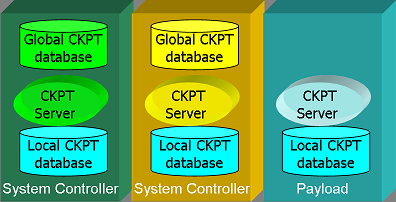

In the OpenClovis SAFplus platform, every cluster node runs a Checkpoint Server, and these servers operate as peers. The System Controller node maintains a global checkpoint database that tracks metadata for every checkpoint and ensures that each checkpoint name is unique across the cluster. The System Controller also ensures that each checkpoint has at least one replica, to prevent data loss from node failures.

Each checkpoint server maintains a local database of checkpoint data, including the replica locations. It also handles replica synchronization, updating all replicas to ensure data consistency.
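The bookkeeping role of the global database can be illustrated with a minimal sketch. `GlobalCheckpointDB` and its methods are hypothetical; the model only enforces the two rules stated above (unique names, at least one replica) and records which nodes hold replicas, so that after a node failure it can report which checkpoints still have a surviving copy to recover from.

```python
class GlobalCheckpointDB:
    """Hypothetical sketch of the System Controller's cluster-wide checkpoint metadata."""

    def __init__(self):
        self._replicas = {}   # checkpoint name -> set of node names holding a replica

    def create(self, name, replica_nodes):
        # Names are unique across the cluster, so a second create with the same name fails.
        if name in self._replicas:
            raise ValueError(f"checkpoint name already in use: {name}")
        # Every checkpoint must have at least one replica.
        if not replica_nodes:
            raise ValueError(f"checkpoint {name} needs at least one replica")
        self._replicas[name] = set(replica_nodes)

    def replica_nodes(self, name):
        return sorted(self._replicas[name])

    def node_failed(self, node):
        # Drop the failed node; report checkpoints that still have a surviving
        # replica to recover from, and those that lost every copy.
        recoverable, lost = [], []
        for name, nodes in sorted(self._replicas.items()):
            if node in nodes:
                nodes.discard(node)
                (recoverable if nodes else lost).append(name)
        return recoverable, lost
```

The example also shows why replica count matters: a checkpoint replicated on two nodes survives the loss of one of them, while a checkpoint whose only replica sat on the failed node does not.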

Types of Checkpoints in CPS

OpenClovis SAFplus supports two types of checkpoints:

- File-Based Checkpoints: These are stored in persistent storage, allowing data to survive across node restarts and component failures. This type provides enhanced durability but is slower than RAM-based storage.

- Server-Based Checkpoints: Stored in RAMFS for high performance, these checkpoints support failovers and component restarts. Server-based checkpoints are further classified by update strategy:

  - Synchronous Checkpoints: All replicas are updated before control returns to the application.

  - Asynchronous Checkpoints: The active replica is updated first, and the other replicas receive the update asynchronously. Depending on system needs, CPS allows control over where the active replica resides:

    - Collocated Checkpoints: The active replica is stored on the same node as the checkpointing process for optimized performance.

    - Non-Collocated Checkpoints: CPS chooses an optimal node for the active replica, taking load balancing into account.

Managing Checkpoint Data Consistency and Performance

To accommodate varying performance and consistency needs, CPS provides flexible checkpoint update options:

- Synchronous Updates: Ensure that all replicas are updated before returning control, which guarantees consistency across replicas.

- Asynchronous Updates: Allow the active replica to update immediately while other replicas update asynchronously, favoring performance over strict data consistency.
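The trade-off between the two update modes can be seen in a small simulation. This is a hypothetical Python model, not CPS code; in the SAF AIS Checkpoint specification the corresponding choices are expressed through creation flags such as `SA_CKPT_WR_ALL_REPLICAS` and `SA_CKPT_WR_ACTIVE_REPLICA`. Here `ReplicatedCheckpoint`, `write`, `propagate`, and `consistent` are illustrative names, and the background propagation is modeled as an explicit call.

```python
from collections import deque


class ReplicatedCheckpoint:
    """Hypothetical model of one checkpoint value replicated on several nodes."""

    def __init__(self, nodes, active, synchronous):
        self.replicas = {node: None for node in nodes}   # node -> last value written there
        self.active = active
        self.synchronous = synchronous
        self._pending = deque()                          # (node, value) updates not yet applied

    def write(self, value):
        if self.synchronous:
            # Synchronous: every replica is updated before write() returns.
            for node in self.replicas:
                self.replicas[node] = value
        else:
            # Asynchronous: only the active replica is updated now; the rest are queued.
            self.replicas[self.active] = value
            for node in self.replicas:
                if node != self.active:
                    self._pending.append((node, value))

    def propagate(self):
        # Stands in for the background step that brings non-active replicas up to date.
        while self._pending:
            node, value = self._pending.popleft()
            self.replicas[node] = value

    def consistent(self):
        return len(set(self.replicas.values())) == 1
```

With `synchronous=True` the replicas agree as soon as `write()` returns; with `synchronous=False` the call returns after touching a single replica, and the others disagree until `propagate()` runs. That window is the consistency cost paid for the faster write.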

Applications that prioritize performance can use collocated checkpoints, ensuring that active replicas are stored locally. Non-collocated checkpoints, on the other hand, allow CPS to manage replica locations independently, optimizing load distribution.
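A placement rule for the active replica might look like the sketch below. It is a hypothetical illustration: the function name, the `load` metric, and the least-loaded policy are assumptions, and the real CPS selection logic for non-collocated checkpoints is not described here.

```python
def choose_active_replica(replica_nodes, writer_node, collocated, load):
    """Hypothetical placement rule for the active replica of an asynchronous checkpoint.

    replica_nodes: nodes holding a replica
    writer_node:   node where the checkpointing process runs
    load:          node -> assumed load figure (lower is better)
    """
    if collocated:
        # Collocated: the active replica stays on the writer's node, so writes avoid
        # a network hop (a local replica is assumed to exist or be created there).
        return writer_node
    # Non-collocated: the service decides; here, the least-loaded node, ties broken by name.
    return min(replica_nodes, key=lambda node: (load.get(node, 0), node))
```

For example, with replicas on `n1`, `n2`, and `n3` and `n1` heavily loaded, a collocated checkpoint written from `n1` keeps its active replica on `n1`, while a non-collocated one would be placed on a lighter node.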

Persistent and Non-Persistent Data Considerations

CPS checkpoint data is stored in RAMFS, which offers high-speed access but does not persist across reboots or CPS restarts. For persistence, CPS can save checkpoints to a hard drive or flash memory; in that setup, the system integrator is responsible for deleting the stored data before starting CPS, to avoid conflicts.
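The integrator-side housekeeping for disk- or flash-backed checkpoints can be sketched with the standard library. Everything here is hypothetical: the directory layout (one file per section), the function names, and the write-then-rename pattern are assumptions chosen for illustration, not how CPS lays out persistent data.

```python
import os
import shutil
import tempfile
from pathlib import Path


def prepare_checkpoint_dir(path: Path) -> None:
    """Hypothetical integrator step, run before CPS starts: discard stale on-disk data."""
    if path.exists():
        shutil.rmtree(path)
    path.mkdir(parents=True)


def save_section(path: Path, section_id: str, data: bytes) -> None:
    # Write to a temporary file first, then rename it over the old copy, so readers
    # never see a half-written section (a production version would also fsync).
    target = path / section_id
    tmp = path / (section_id + ".tmp")
    tmp.write_bytes(data)
    os.replace(tmp, target)


def load_sections(path: Path) -> dict:
    # Read back every completed section, skipping leftover temporary files.
    return {p.name: p.read_bytes() for p in path.iterdir() if not p.name.endswith(".tmp")}


if __name__ == "__main__":
    ckpt_dir = Path(tempfile.mkdtemp()) / "ckpt"   # hypothetical location on disk or flash
    prepare_checkpoint_dir(ckpt_dir)
    save_section(ckpt_dir, "sessions", b"42")
    print(load_sections(ckpt_dir))
```

Running `prepare_checkpoint_dir` at startup is the step the text assigns to the system integrator: it guarantees that CPS never picks up leftovers from a previous run.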

Supporting Hot Standby with CPS

OpenClovis CPS addresses limitations in the SAF standard by offering a callback-based notification mechanism. Applications can register for updates on specific checkpoints, receiving a callback when data is updated. This feature is especially useful for hot-standby systems, where secondary components need immediate updates on state changes.
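The callback pattern behind hot standby can be sketched in a few lines. This Python model is illustrative only and is not the OpenClovis callback API; `NotifyingCheckpointStore`, `register_callback`, and `HotStandby` are hypothetical names. The point it shows is that the standby is pushed each update as it happens instead of polling the checkpoint.

```python
from typing import Callable, Dict, List

# Hypothetical callback signature: (checkpoint name, section id, new data)
UpdateCallback = Callable[[str, str, bytes], None]


class NotifyingCheckpointStore:
    """Hypothetical model of update notifications on checkpoints."""

    def __init__(self) -> None:
        self._data: Dict[tuple, bytes] = {}                     # (checkpoint, section) -> data
        self._subscribers: Dict[str, List[UpdateCallback]] = {}  # checkpoint -> callbacks

    def register_callback(self, checkpoint: str, callback: UpdateCallback) -> None:
        self._subscribers.setdefault(checkpoint, []).append(callback)

    def write(self, checkpoint: str, section: str, data: bytes) -> None:
        self._data[(checkpoint, section)] = data
        # Notify only the components registered for this particular checkpoint.
        for callback in self._subscribers.get(checkpoint, []):
            callback(checkpoint, section, data)


class HotStandby:
    """Standby component that mirrors the active component's state as updates arrive."""

    def __init__(self) -> None:
        self.state: Dict[str, bytes] = {}

    def on_update(self, checkpoint: str, section: str, data: bytes) -> None:
        # Apply the change immediately so the standby is ready to take over.
        self.state[section] = data
```

A standby that registers `on_update` for its peer's checkpoint holds the latest state at every moment, so a failover does not have to begin by reading the whole checkpoint back.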

Conclusion

The OpenClovis Checkpointing Service provides a powerful, flexible solution for high-availability environments, allowing applications to manage state data effectively across failovers and switchovers. With options for checkpoint persistence, asynchronous updates, and hot-standby support, CPS offers robust tools to maintain application continuity and high performance in distributed systems.

For other support, please email support@openclovis.org.